Image Classification & Regression

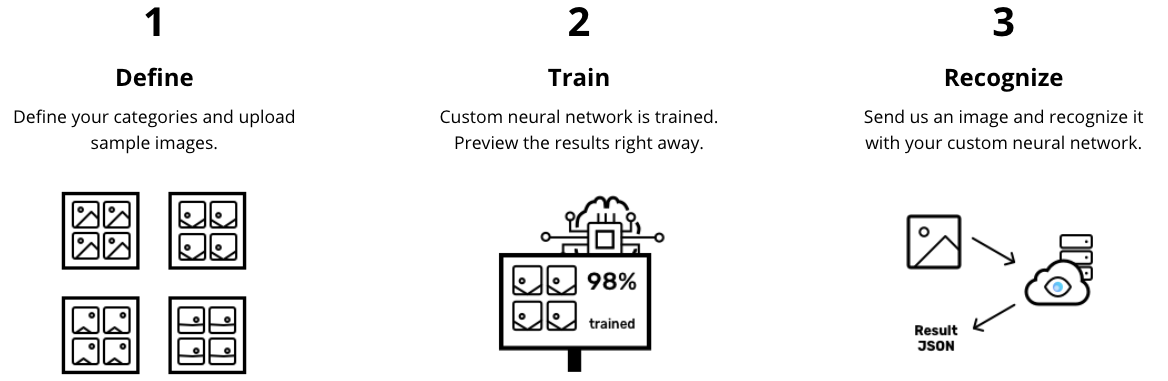

Image Classification & Regression service (previously vize.ai) provides a custom image recognition API for training models that recognize and classify images or predict (regress) continuous values from images. It allows you to bring state-of-the-art artificial intelligence into your project. We provide a user interface for simple setup of your task (neural network definition), account management, image upload, training of new models and evaluation of results. After an easy setup, you get results over the API and you are ready to build this functionality into your application. It's easy, quick and highly scalable.

Tutorial for newcomers

If you don't know how to train your image recognition model, read our step-by-step tutorial on our blog. We also have a video tutorial on YouTube.

Python Library

If you use the Python programming language in your project, you can query our API with our library. The library is available on GitLab and through PyPI.

The task is where you start. Each task has a set of labels (categories, tags or values). A task represents an abstract definition for training a recognition model. Each label can be assigned to multiple training images. Only you can access your tasks and other data.

A model is the machine learning model: a neural network trained on your specific images and thus highly accurate at recognizing new images. Each model has an accuracy measured at the end of the training. A model is private to its owner. Each retraining creates a new model entity whose version (an integer) is one higher than the previous one. You can select which model version is deployed in production.

Every task has a type: Tagging ('multi_label'), Categorization ('multi_class') or Regression ('regression'). You select the type when creating the task. A Tagging task automatically creates one Negative label.

A label is a feature you want to recognize in your images. A label must be of one of these types: category (Categorization task), tag (Tagging task) or value (Regression task). You provide training images with this feature and Ximilar learns to recognize it.
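The correspondence between task types and label types described above can be written down as a small lookup table. This is a minimal sketch: the dictionary and the `label_type_for_task` helper are hypothetical and not part of the ximilar-client library, and the label type strings `tag` and `value` are assumed by analogy with `category`, which the curl examples below use.

```python
# Hypothetical lookup table (not part of ximilar-client): task "type" values
# mapped to the label type each task accepts. "tag" and "value" are assumed
# strings by analogy with "category".
TASK_TYPE_TO_LABEL_TYPE = {
    "multi_class": "category",  # Categorization task
    "multi_label": "tag",       # Tagging task
    "regression": "value",      # Regression task
}


def label_type_for_task(task_type: str) -> str:
    """Return the label type that can be connected to a task of this type."""
    try:
        return TASK_TYPE_TO_LABEL_TYPE[task_type]
    except KeyError:
        raise ValueError(f"unknown task type: {task_type!r}") from None


print(label_type_for_task("multi_label"))  # tag
```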

Create a Categorization task with Categories (Labels) when:

- Categorization assumes that each sample is assigned to one and only one label.

- That means the labels are mutually exclusive, so each image within the task must have exactly one label (category).

- The desired prediction output has only one correct label (category).

- You know that once your task/model is in production, only images from the domain of your labels will be sent. For example, if you create a task for distinguishing apples from bananas, you do not expect images of pineapples or dogs.

Some examples of correct categorization tasks:

- Recognizing fashion brands like Adidas, Nike, Reebok, Puma ... from images of logos, when only one logo is present in the image

- If you want to recognize Malignant vs Benign Cells

- If you want to recognize Cats vs Dogs vs Birds

- If you want to recognize Cat vs Not-A-Cat

- If you want to recognize images with or without a watermark

- If you want to recognize Bedroom vs Bathroom vs Kitchen vs Pool

- If you want to recognize Damaged Items vs Non-damaged Items

- If you want to categorize the Color of a Dress, like Red vs Green vs Blue

Create a Tagging task with Tags (Labels) when:

- The labels are not mutually exclusive, so an image within the task can have multiple tags (labels).

- That means multiple tags can be relevant for the image, and the tags may be related to each other.

- That means the desired prediction output for the image can have multiple ground-truth labels.

Some examples of correct tagging tasks:

- If you want to build a Tagger for Dresses with tags Red, Green, Blue, Maxi, Midi, Mini, Long Sleeves, Short Sleeves, No Sleeves, ...

- If you want to assign features to Real Estate Images, such as Indoor, Outdoor, Kitchen, Wooden, Table, Chair, Modern, Fireplace, Hardwood Floor, TV, ...

- If you want to recognize Cat vs Not-A-Cat (this is also possible as a Categorization task; in a Tagging task, your Not-A-Cat label is represented by the Negative label)

Be aware that you can create multiple Categorization tasks (one task for recognizing Color, another for Shape and another for Pattern) to simulate the behavior of a Tagging task.

Create a Regression task with a Value (Label) when:

- The label should represent a numerical value from some range, for example 1-100.

Some examples of correct regression tasks:

- If you want to build a model that predicts the aesthetic rating of stock photos

- If you want to build a model that rates images, such as real estate photos, on a range (0-X)

- If you want to predict the quality or the level of damage of a product

- If you want to estimate the size of an item

Before training the model, the Ximilar Custom Image Recognition service internally splits your images into training images (80%) and testing images (20%). Training images are used for training; testing images are used to evaluate the accuracy, precision and recall of the model. The accuracy of the model is the fraction of testing images whose labels are recognized correctly: an accuracy of 95% means that 95 of 100 images get the right label. Accuracy depends on the number of images uploaded for training; with only a few training images, the model will not be very accurate.
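To make the 80/20 split and the accuracy figure concrete, here is a generic illustration in plain Python. It is not Ximilar's internal procedure: the shuffling, the fixed seed and the rounding of the test-set size are assumptions chosen for the example.

```python
import random


def split_train_test(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of items and split it into (train, test) lists.

    Illustrative only; the service performs its own split internally.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(true_labels, predicted_labels):
    """Fraction of test images whose predicted label equals the true label."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("cannot compute accuracy of an empty test set")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


train, test = split_train_test(range(100))
print(len(train), len(test))  # 80 20

# 95 correct predictions out of 100 test images -> accuracy 0.95
truth = ["dog"] * 100
predictions = ["dog"] * 95 + ["cat"] * 5
print(accuracy(truth, predictions))  # 0.95
```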

Here are some tips for training a recognition model.

API reference

This is the documentation of the Ximilar Image Recognition API. The API follows the general rules of the Ximilar API as described in the section First steps.

The Image Recognition API is located at https://api.ximilar.com/recognition/. Each API entity (task, label, image) is identified by its unique ID, formatted as a universally unique identifier (UUID) string. You can copy IDs from browser URLs to quickly access entities programmatically. Example of a UUID:

0a8c8186-aee8-47c8-9eaf-348103feb14d
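Because every entity ID is a UUID string, you can check an ID copied from a browser URL before using it in a request. A minimal sketch using Python's standard `uuid` module; the `is_canonical_uuid` helper is hypothetical and only accepts the hyphenated 8-4-4-4-12 form shown above.

```python
import uuid


def is_canonical_uuid(value: str) -> bool:
    """True if value is a UUID string in hyphenated 8-4-4-4-12 form."""
    if not isinstance(value, str):
        return False
    try:
        parsed = uuid.UUID(value)
    except ValueError:
        return False
    # uuid.UUID also accepts forms without hyphens, with braces or with a
    # "urn:uuid:" prefix, so compare against the canonical string to be strict.
    return str(parsed) == value.lower()


print(is_canonical_uuid("0a8c8186-aee8-47c8-9eaf-348103feb14d"))  # True
print(is_canonical_uuid("my-task"))  # False
```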

Classify endpoint - /v2/classify/

The classify endpoint executes a trained image recognition model on images to get predictions. The endpoint accepts the POST method, and you can find it in our interactive API reference. You can pass an image in the _url or _base64 field. The /v2/classify endpoint takes a JSON-formatted body where you specify the records to process (up to 10), the identification of the task and, optionally, the version of your model.

To sum up, /v2/classify:

- can classify a batch of images (up to 10) at once

- lets you optionally specify the version of the model to be used

- does not accept a local image file directly; you must first convert it to Base64
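If you call /v2/classify/ without the Python library, you have to Base64-encode local files yourself. The sketch below builds such a JSON body with the standard library only; the `build_classify_body` helper is hypothetical, and rejecting an empty list of records is an assumption (the documented limit is only the maximum of 10).

```python
import base64
import json


def build_classify_body(task_id, image_paths, version=None):
    """Build a JSON body for /v2/classify/ from local image files.

    Hypothetical helper: each file is read and Base64-encoded into a
    "_base64" record, because the endpoint does not accept raw file uploads.
    """
    if not 1 <= len(image_paths) <= 10:
        raise ValueError("/v2/classify/ accepts between 1 and 10 records")
    records = []
    for path in image_paths:
        with open(path, "rb") as f:
            records.append({"_base64": base64.b64encode(f.read()).decode("ascii")})
    body = {"task": task_id, "records": records}
    if version is not None:
        body["version"] = version  # omit to use the active/last model version
    return json.dumps(body)
```

The returned string can be sent as the request body with any HTTP client, together with the `Content-Type: application/json` and `authorization: Token __API_TOKEN__` headers used in the curl example below.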

Parameters:

- records: A list of images to classify (up to 10); each record must contain either the _url or the _base64 field.

- task: UUID identification of your task.

- version: Optional. Version of the model; the default is the active/last version of your model.

- store_images: Optional, boolean. If set to true, all images in the records are stored to your training images, marked with the selected label. IDs of the stored images are returned in each record in the field _stored_image_id. This feature must be turned on for the specific task and is available only for users with the Professional pricing plan.

curl -H "Content-Type: application/json" -H "authorization: Token __API_TOKEN__" https://api.ximilar.com/recognition/v2/classify -d '{"task": "0a8c8186-aee8-47c8-9eaf-348103xa214d", "version": 2, "records": [ {"_url": "https://bit.ly/2IymQJv" } ] }'

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

task, status = client.get_task(task_id='__ID_TASK__')

# you can send an image in _file, _url or _base64 format

# the _file format is internally converted to _base64 as an RGB image

result = task.classify([{'_url': '__URL_PATH_TO_IMG__'}, {'_file': '__LOCAL_FILE_PATH__'}, {'_base64': '__BASE64_DATA__'}])

# the result is in JSON/dictionary format and you can access it in the following way:

best_label = result['records'][0]['best_label']

The result has a JSON structure similar to this:


{
  "task_id": "185x2019-1182-439c-900b-9f29c3w35926",
  "status": {
    "code": 200,
    "text": "OK"
  },
  "statistics": {
    "processing time": 0.08802390098571777
  },
  "version": 9,
  "records": [
    {
      "_status": {
        "code": 200,
        "text": "OK"
      },
      "best_label": {
        "prob": 0.98351,
        "name": "dog",
        "id": "9d4b5433-add9-46e2-be64-7a928c5f68e8"
      },
      "labels": [
        {
          "prob": 0.98351,
          "name": "dog",
          "id": "9d4b5433-add9-46e2-be64-7a928c5f68e8"
        },
        {
          "prob": 0.01649,
          "name": "cat",
          "id": "08435c8c-554d-4a25-8702-f57526f1224f"
        }
      ],
      "_url": "https://vize.ai/fashion_examples/10.jpg",
      "_width": 404,
      "_height": 564
    }
  ]
}
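Once you have the result as a dictionary, picking the best label (Categorization) or all sufficiently probable labels (Tagging) is a matter of walking the structure above. A minimal sketch; the helper names and the threshold you pass are hypothetical choices, not part of the API.

```python
def best_label_name(result, record_index=0):
    """Name of the best label for one record of a /v2/classify/ result."""
    return result["records"][record_index]["best_label"]["name"]


def labels_above(result, threshold, record_index=0):
    """Names of all labels of one record whose probability is >= threshold.

    Useful for Tagging tasks, where several labels may be relevant at once.
    The threshold is your own choice, not something the API provides.
    """
    labels = result["records"][record_index]["labels"]
    return [label["name"] for label in labels if label["prob"] >= threshold]


# Trimmed copy of the example result above.
example = {
    "records": [
        {
            "best_label": {"prob": 0.98351, "name": "dog"},
            "labels": [
                {"prob": 0.98351, "name": "dog"},
                {"prob": 0.01649, "name": "cat"},
            ],
        }
    ]
}
print(best_label_name(example))      # dog
print(labels_above(example, 0.01))   # ['dog', 'cat']
```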

Task endpoint - /v2/task/

Task endpoints let you manage tasks in your account. You can list all tasks and create, delete and modify tasks. Until the first training of a task finishes successfully, the production version of the task is -1 and the task cannot be used for classification.

List tasks (returns paginated result):

curl -v -XGET -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/recognition/v2/task/

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

tasks, status = client.get_all_tasks()
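The list endpoints return paginated results. A minimal sketch of collecting all pages with the standard library, assuming (not confirmed by this page) that each page is a JSON object with a `results` list and a `next` URL that is null on the last page, as in Django REST Framework's default pagination; `fetch_json` and `iterate_all` are hypothetical helpers.

```python
import json
import urllib.request

TASK_LIST_URL = "https://api.ximilar.com/recognition/v2/task/"


def fetch_json(url, token):
    """GET a URL with the token header and decode the JSON response."""
    request = urllib.request.Request(url, headers={"Authorization": f"Token {token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def iterate_all(first_url, fetch):
    """Yield every item of a paginated listing.

    Assumes each page is a dict with a "results" list and a "next" URL
    that is None (JSON null) on the last page.
    """
    url = first_url
    while url:
        page = fetch(url)
        yield from page["results"]
        url = page.get("next")
```

For example, `for task in iterate_all(TASK_LIST_URL, lambda url: fetch_json(url, "__API_TOKEN__")): print(task)` would print every task in your account. Passing `fetch` as a parameter keeps the paging logic independent of the HTTP code, which also makes it easy to test.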

Create a categorization (multi_class), tagging (multi_label) or regression task:

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'name=My new task' -F 'type=multi_class' -F 'description=Demo task' https://api.ximilar.com/recognition/v2/task/

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

classification_task, status = client.create_task('__TASK_NAME__', type="multi_class")

Delete task:

curl -v -XDELETE -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/recognition/v2/task/__TASK_ID__/

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

task, status = client.get_task(task_id='__ID_TASK__')

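# either remove the task by its ID through the client

client.remove_task(task.id)

# or remove it directly through the task object

task.remove()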

Label endpoint - /v2/label/

Label endpoints let you manage labels (categories, tags, values) in your tasks. You manage your labels independently (list, create, delete and modify them) and then connect them to your tasks. Each task requires at least two labels for training, and each label must contain at least 20 images.
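The training prerequisites above can be checked locally before you start a training. A minimal sketch; `training_problems` is a hypothetical helper that encodes only the two rules stated here (the service may apply further checks), and you would fill the `images_per_label` dictionary yourself, for example by counting the training images of each label as shown in the training image section below.

```python
MIN_LABELS_PER_TASK = 2
MIN_IMAGES_PER_LABEL = 20


def training_problems(images_per_label):
    """List reasons why a task is not yet ready for training.

    images_per_label maps each connected label name to its number of
    training images. An empty list means both minimums are met.
    """
    problems = []
    if len(images_per_label) < MIN_LABELS_PER_TASK:
        problems.append(
            f"task has {len(images_per_label)} label(s), "
            f"needs at least {MIN_LABELS_PER_TASK}"
        )
    for name, count in sorted(images_per_label.items()):
        if count < MIN_IMAGES_PER_LABEL:
            problems.append(
                f"label {name!r} has {count} image(s), "
                f"needs at least {MIN_IMAGES_PER_LABEL}"
            )
    return problems


print(training_problems({"cat": 25, "dog": 12}))
# ["label 'dog' has 12 image(s), needs at least 20"]
```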

List all your labels (returns paginated result):

curl -v -XGET -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/recognition/v2/label/

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

labels, status = client.get_all_labels()

List all labels of the task:

curl -v -XGET -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/recognition/v2/label/?task=__TASK_ID__

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

task, status = client.get_task(task_id='__ID_TASK__')

# get all labels which are connected to the task

labels, status = task.get_labels()

for label in labels:
    print(label.id, label.name)

Search all labels whose name contains a substring:

curl -v -XGET -H 'Authorization: Token __API_TOKEN__' 'https://api.ximilar.com/recognition/v2/label/?search=__SEARCH_QUERY__'

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

labels, status = client.get_labels_by_substring('__LABEL_NAME__')
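Search queries that contain spaces or other special characters must be URL-encoded. A minimal sketch with `urllib.parse.urlencode`; the `label_list_url` helper is hypothetical, and combining the `task` and `search` filters in one request is an assumption this page does not confirm.

```python
from urllib.parse import urlencode

LABEL_URL = "https://api.ximilar.com/recognition/v2/label/"


def label_list_url(task_id=None, search=None):
    """Build the label-listing URL with optional task and search filters.

    Hypothetical helper; sending both filters at once is an assumption.
    """
    params = {}
    if task_id is not None:
        params["task"] = task_id
    if search is not None:
        params["search"] = search
    # urlencode escapes special characters and turns spaces into "+"
    return LABEL_URL + ("?" + urlencode(params) if params else "")


print(label_list_url(search="red dress"))
# https://api.ximilar.com/recognition/v2/label/?search=red+dress
```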

Create new label (category, tag or value):

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'name=New label' -F 'type=category' https://api.ximilar.com/recognition/v2/label/

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

# creating new label (CATEGORY, which is default)

label, status = client.create_label(name='__NEW_LABEL_NAME__', label_type='category')

Connect a label to your task (category to Categorization task, tag to Tagging task and value to Regression task):

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'label_id=__LABEL_ID__' https://api.ximilar.com/recognition/v2/task/__TASK_ID__/add-label

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

task, status = client.get_task(task_id='__ID_TASK__')

label, status = client.get_label("__SOME_LABEL_ID__")

task.add_label(label.id)

Remove a label from your task:

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'label_id=__LABEL_ID__' https://api.ximilar.com/recognition/v2/task/__TASK_ID__/remove-label

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

task, status = client.get_task(task_id='__ID_TASK__')

label, status = client.get_label("__SOME_LABEL_ID__")

# detach (not delete) an existing label from an existing task

task.detach_label(label.id)

# or delete the label entirely (which also detaches it from all tasks)

client.remove_label(label.id)

Training image endpoint - /v2/training-image/

The training image endpoint lets you upload training images and add labels to them. You can list training images and create, delete and modify them. The API allows adding more than one label to each training image, as needed for Tagging (multi_label) tasks.

Upload training image (file or base64):

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'img_path=@__FILE__;type=image/jpeg' https://api.ximilar.com/recognition/v2/training-image/

curl --request POST --url https://api.ximilar.com/recognition/v2/training-image/ --header 'authorization: Token __API_TOKEN__' --header 'content-type: application/json' --data '{"meta_data": {"field": "key"}, "noresize": false, "base64": "BASE64"}'

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

images, status = client.upload_images([{'_url': '__URL_PATH_TO_IMAGE__', 'labels': [], "meta_data": {"field": "key"}},

{'_file': '__LOCAL_FILE_PATH__', 'labels': []},

{'_base64': '__BASE64_DATA__', 'labels': []}])

# Upload image without resizing it (for example Custom Object Detection requires high resolution images):

images, status = client.upload_images([{'_url': '__URL_PATH_TO_IMAGE__', "noresize": True}])
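The JSON variant of the upload can also be prepared with the standard library instead of the Python client. A minimal sketch: `build_upload_request` is hypothetical, and the field names `base64`, `meta_data` and `noresize` are copied from the curl example above.

```python
import base64
import json
import urllib.request

UPLOAD_URL = "https://api.ximilar.com/recognition/v2/training-image/"


def build_upload_request(image_bytes, token, meta_data=None, noresize=False):
    """Prepare (but do not send) a JSON POST that uploads one training image."""
    body = {
        "base64": base64.b64encode(image_bytes).decode("ascii"),
        "noresize": noresize,  # True keeps the original resolution
    }
    if meta_data is not None:
        body["meta_data"] = meta_data
    return urllib.request.Request(
        UPLOAD_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Token {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Calling `urllib.request.urlopen(build_upload_request(image_bytes, "__API_TOKEN__"))` would then send the request; keeping construction separate from sending lets you inspect the request first.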

Add label (category, tag) to a training image:

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'label_id=__LABEL_ID__' https://api.ximilar.com/recognition/v2/training-image/__IMAGE_ID__/add-label

images[0].add_label("__SOME_LABEL_ID__")

Add value (regression label) to a training image:

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'label_id=__LABEL_ID__' -F 'value=__VALUE__' https://api.ximilar.com/recognition/v2/training-image/__IMAGE_ID__/add-label

images[0].add_label("__SOME_LABEL_ID__", value=VALUE)

Update value (regression label) of a training image:

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'label_id=__LABEL_ID__' -F 'value=__VALUE__' https://api.ximilar.com/recognition/v2/training-image/__IMAGE_ID__/update-value

images[0].update_label("__SOME_LABEL_ID__", value=VALUE)

Remove label (category, tag, value) from a training image:

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'label_id=__LABEL_ID__' https://api.ximilar.com/recognition/v2/training-image/__IMAGE_ID__/remove-label

label, status = client.get_label("__SOME_LABEL_ID__")

images[0].detach_label(label.id)

Get all images of given label (returns paginated result):

curl -v -XGET -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/recognition/v2/training-image/?label=__LABEL_ID__

label, status = client.get_label("__SOME_LABEL_ID__")

images, next_page, status = label.get_training_images()

while images:
    for image in images:
        print(str(image.id))
    if not next_page:
        break
    images, next_page, status = label.get_training_images(next_page)

Training endpoint - /v2/task/__TASK_ID__/train/

Use the training endpoint to start training a model. Training takes from a few minutes up to a few hours, depending on the number of images in your training collection. You are notified about the start and the end of the training by email.

Start training:

curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/recognition/v2/task/__TASK_ID__/train/

from ximilar.client import RecognitionClient

client = RecognitionClient(token="__API_TOKEN__")

task, status = client.get_task(task_id='__ID_TASK__')

task.train()