Object Detection

The Ximilar Custom Object Detection service provides a trainable detection API that locates objects (as bounding boxes) in images. It allows you to bring state-of-the-art artificial intelligence into your project. We provide a user interface for simple setup of your task, where you can manage your account, upload images, train new models and evaluate the results. After a short setup, you get results over the API and are ready to build this functionality into your application. It’s easy, quick and highly scalable.

Tutorial for newcomers

If you don't know how to train your custom object detection model, read our step-by-step tutorial on our blog. We also have a video tutorial on YouTube.

Python Library

If you use Python in your project, you can query our API with our Python library. The library is available on GitLab and on PyPI.

The task is where you start. A task represents an abstract definition for training a detection model. Each task has a set of detection labels. Only you can access your tasks and other data.

A model is the machine learning model behind your image detection API. It's a neural network trained on your specific images and is therefore highly accurate at detecting objects in new images. Each model has an accuracy measured at the end of training. A model is private to its owner. Each retraining increases the model version by one, and you can select which model version is deployed.

A label (category) is a feature you want to detect in your images. Labels define the types of detection objects (bounding boxes) that are created on images.

Every detection (bounding box) on a training image is represented by an Object entity. Every object:

- is located on a training image

- is of some detection type (Label)

- is represented by a bounding box with four coordinates [xmin, ymin, xmax, ymax] on the image
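A bounding box in this [xmin, ymin, xmax, ymax] form can be handled as a small value type. The class below is a hypothetical helper, not part of the Ximilar library; it assumes pixel coordinates (as in the detection result later on this page) and rejects boxes whose corners are out of order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Hypothetical helper: a box in pixel coordinates [xmin, ymin, xmax, ymax]."""

    xmin: int
    ymin: int
    xmax: int
    ymax: int

    def __post_init__(self) -> None:
        # The top-left corner must lie above and to the left of the bottom-right corner.
        if self.xmin >= self.xmax or self.ymin >= self.ymax:
            raise ValueError(f"invalid box: {self.as_list()}")

    @classmethod
    def from_list(cls, coords: list) -> "BoundingBox":
        xmin, ymin, xmax, ymax = coords
        return cls(xmin, ymin, xmax, ymax)

    def as_list(self) -> list:
        # The list order used by the API examples on this page.
        return [self.xmin, self.ymin, self.xmax, self.ymax]

    @property
    def width(self) -> int:
        return self.xmax - self.xmin

    @property
    def height(self) -> int:
        return self.ymax - self.ymin

    @property
    def area(self) -> int:
        return self.width * self.height
```

For example, `BoundingBox.from_list([2103, 467, 2694, 883])` describes a box 591 pixels wide and 416 pixels high.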

You must upload training images through the Recognition API endpoint /recognition.

Create a Detection task with Labels when:

- You need to know the exact location of the object you want to detect

Some examples of correct Detection tasks:

- Detecting exact position of Logo on the Image

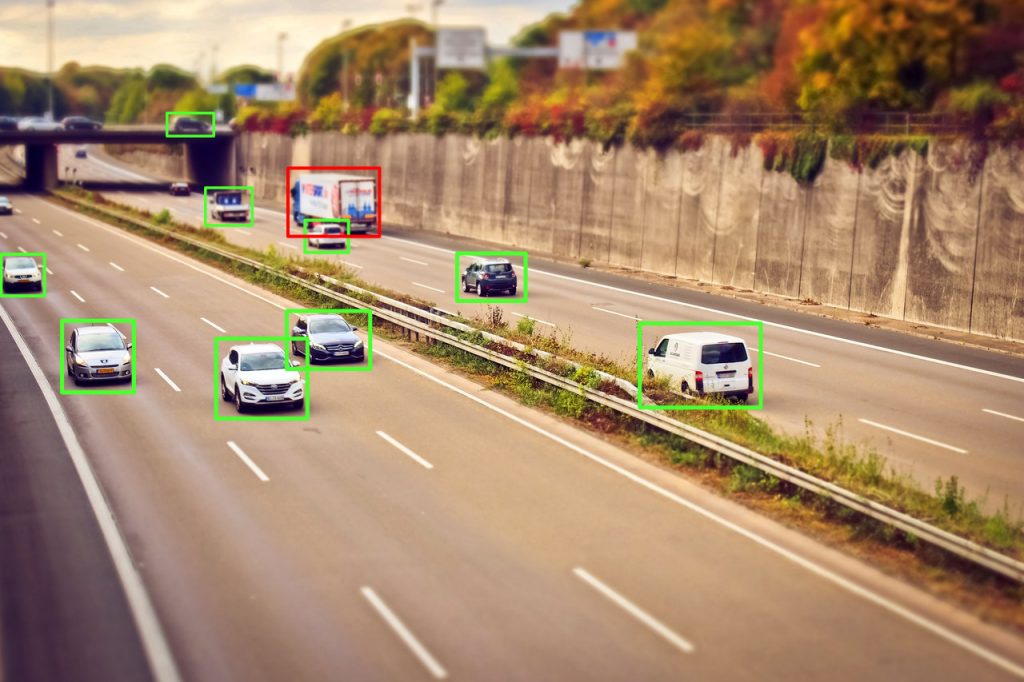

- Detecting positions/bounding boxes of cars, traffic signs, persons, face

- Detecting damages on the blades of Wind Power Plants

- Detecting and counting ships in harbor with your Drones or Satellite Imagery

Before training the model, the Ximilar Custom Object Detection service internally splits your images into training images (80%) and testing images (20%). Training images are used for training; testing images are used to evaluate the accuracy, precision and recall of the model. The accuracy of the model is a number saying how accurately your labels are recognized: an accuracy of 95% means that 95 out of 100 images get the right label. Accuracy depends on the number of images uploaded for training; with only a few training images, both the model and its measured accuracy are less reliable.
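The split happens on Ximilar's side, and its exact procedure is not described here. Purely as an illustration of what an 80/20 split means, a random split of image IDs could look like this (a sketch, not the service's implementation; the function name is hypothetical):

```python
import random


def split_images(image_ids, train_fraction=0.8, seed=None):
    """Illustrative random split of image IDs into training and testing sets."""
    ids = list(image_ids)
    # Shuffle a copy so the caller's sequence is left untouched.
    random.Random(seed).shuffle(ids)
    cut = int(len(ids) * train_fraction)
    return ids[:cut], ids[cut:]
```

With 100 images this yields 80 training and 20 testing images. With only 10 images, the testing set has just 2 images, so a per-image accuracy measured on it can only be 0%, 50% or 100%, which is why small collections give unreliable accuracy figures.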

Here are some tips for training a recognition model.

API reference

This is the documentation of the Ximilar Custom Detection API. The API follows the general rules of the Ximilar API described in the First steps section.

The Custom Detection API is located at https://api.ximilar.com/detection/. Only the Image endpoint is located at https://api.ximilar.com/recognition/. Each API entity (task, label, object) is identified by its ID, which is formatted as a universally unique identifier (UUID) string. You can copy IDs from browser URLs to quickly access entities programmatically. Example of a UUID:

0a8c8186-aee8-47c8-9eaf-348103feb14d
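Python's standard `uuid` module can check that an ID copied from a browser URL is well formed before you send it to the API. The helper name is illustrative; it accepts only the canonical hyphenated form shown above.

```python
import uuid


def is_valid_id(value: str) -> bool:
    """Return True if value is a UUID in canonical 8-4-4-4-12 hexadecimal form."""
    try:
        # uuid.UUID also accepts braces or missing hyphens, so compare
        # against its canonical string to insist on the hyphenated form.
        return str(uuid.UUID(value)) == value.lower()
    except ValueError:
        return False
```

For example, `is_valid_id("0a8c8186-aee8-47c8-9eaf-348103feb14d")` returns `True`, while a string containing a non-hexadecimal character returns `False`.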

Detect endpoint - /v2/detect/

The detect endpoint runs object detection on images and is the main endpoint of the entire detection system. It accepts the POST method, and you can also find it in our interactive API reference. You pass each image in the _url or _base64 field. The /v2/detect endpoint takes a JSON body in which you specify the records to process (up to 10), the identification of your task and, optionally, the version of your model. It returns only objects whose predicted probability is higher than 30% (see the keep_prob parameter).

To sum up, /v2/detect:

- can process a batch of up to 10 images at once

- lets you optionally specify the version of the model to use

Parameters:

- records: A list of images to process (up to 10); each record must contain either the _url or the _base64 field.

- task_id: UUID identification of your task.

- version: Optional. Version of the model; the default is the active/last version of your model.

- keep_prob: Optional. Probability threshold between 0.0 and 1.0; objects with a lower predicted probability are not returned. The default is 0.3 (30%).
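The rules above can be checked on the client before a request is sent. The helper below is a hypothetical sketch, not part of the Ximilar library. It assumes that `_base64` holds the plain base64 encoding of the image file bytes, and it requires exactly one image source per record, which is stricter than "either of" and is an assumption.

```python
import base64
import json


def build_detect_body(task_id, records, version=None, keep_prob=None):
    """Hypothetical helper: assemble and sanity-check a /v2/detect JSON body."""
    if not 1 <= len(records) <= 10:
        raise ValueError("send between 1 and 10 records per request")
    for record in records:
        # Assumption: each record carries exactly one image source.
        if ("_url" in record) == ("_base64" in record):
            raise ValueError("each record needs exactly one of _url or _base64")
    body = {"task_id": task_id, "records": records}
    if version is not None:
        body["version"] = version
    if keep_prob is not None:
        if not 0.0 <= keep_prob <= 1.0:
            raise ValueError("keep_prob must lie between 0.0 and 1.0")
        body["keep_prob"] = keep_prob
    return json.dumps(body)


def base64_record(image_bytes):
    """Wrap raw image bytes as a record (assumes plain base64 is accepted)."""
    return {"_base64": base64.b64encode(image_bytes).decode("ascii")}
```

Optional fields are left out of the body when not given, so the API falls back to its defaults (the active model version and a 0.3 threshold).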

```bash
curl -H "Content-Type: application/json" -H "Authorization: Token __API_TOKEN__" https://api.ximilar.com/detection/v2/detect -d '{"task_id": "__TASK_ID__", "version": 2, "descriptor": 0, "records": [ {"_url": "https://bit.ly/2IymQJv" } ] }'
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")

# get Detection Task
detection_task, status = client.get_task("__DETECTION_TASK_ID__")

# get detection result
result = detection_task.detect([{"_url": "__URL_PATH_TO_IMAGE__"}])
```
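If you prefer not to install the client library, the curl call above can be reproduced with Python's standard library. This sketch only builds the request; it omits the undocumented `descriptor` field and has no error handling, and the function name is illustrative.

```python
import json
import urllib.request

API_URL = "https://api.ximilar.com/detection/v2/detect"


def make_detect_request(token, task_id, image_url):
    """Build (but do not send) the same POST request as the curl example."""
    body = {"task_id": task_id, "records": [{"_url": image_url}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Token {token}",
        },
        method="POST",
    )


# To send it and parse the JSON response:
# with urllib.request.urlopen(make_detect_request(token, task_id, url)) as response:
#     result = json.load(response)
```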

The result has a JSON structure similar to this:


```json
{
  "task_id": "__TASK_ID__",
  "records": [
    {
      "_url": "__SOME_URL__",
      "_status": {
        "code": 200,
        "text": "OK"
      },
      "_width": 2736,
      "_height": 3648,
      "_objects": [
        {
          "name": "Person",
          "id": "b9124bed-5192-47b8-beb7-3eca7026fe14",
          "bound_box": [2103, 467, 2694, 883],
          "prob": 0.9890862107276917
        },
        {
          "name": "Car",
          "id": "b9134c4d-5062-47b8-bcb7-3eca7226fa14",
          "bound_box": [100, 100, 500, 883],
          "prob": 0.9890862107276917
        }
      ]
    }
  ]
}
```
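Because the result is plain JSON, post-processing needs only the standard library. As a sketch, the hypothetical helper below skips records whose `_status.code` is not 200, keeps objects at or above a client-side probability threshold (which can be stricter than the server-side `keep_prob`), and counts them per label. The field names are taken from the sample result above.

```python
from collections import Counter


def summarize_detections(result, min_prob=0.5):
    """Return (name, prob, bound_box) tuples above min_prob and per-label counts."""
    kept = []
    for record in result.get("records", []):
        # Skip records the API could not process.
        if record.get("_status", {}).get("code") != 200:
            continue
        for obj in record.get("_objects", []):
            if obj["prob"] >= min_prob:
                kept.append((obj["name"], obj["prob"], obj["bound_box"]))
    counts = Counter(name for name, _, _ in kept)
    return kept, counts
```

Counting per label is a direct way to handle use cases such as counting ships in a harbor.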

Task endpoint - /v2/task/

Task endpoints let you manage tasks in your account. You can list all tasks, and create, delete, and modify them. Until the first training of a task finishes successfully, the production version of the task is -1 and the task cannot be used for detection.

List tasks (returns a paginated result):

```bash
curl -v -XGET -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/detection/v2/task/
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")
tasks, status = client.get_all_tasks()
```
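When you call the raw endpoint instead of the library, you receive one page at a time. The sketch below assumes each page is a JSON object with a `results` list and a `next` URL that is null on the last page; this is a common REST pagination shape, but it is an assumption here, so check the First steps section for the exact format. The fetch function is passed in, so the paging logic can be tried without network access.

```python
import json
import urllib.request


def fetch_json(url, token):
    """Download one page with the same token header as the curl examples."""
    request = urllib.request.Request(url, headers={"Authorization": f"Token {token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def iterate_all(first_url, fetch):
    """Yield every item across pages, following the assumed 'next' links."""
    url = first_url
    while url:
        page = fetch(url)
        yield from page.get("results", [])
        url = page.get("next")
```

Usage: `tasks = list(iterate_all("https://api.ximilar.com/detection/v2/task/", lambda u: fetch_json(u, "__API_TOKEN__")))`.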

Create task:

```bash
curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'name=My new task' -F 'description=Demo task' https://api.ximilar.com/detection/v2/task/
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")

# create a new task and fetch it again by its ID
task, status = client.create_task("__DETECTION_TASK_NAME__")
task, status = client.get_task(task.id)
```

Delete task:

```bash
curl -v -XDELETE -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/detection/v2/task/__TASK_ID__/
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")

# get Detection Task
task, status = client.get_task("__DETECTION_TASK_ID__")

# delete the task
task.remove()
```

Label endpoint - /v2/label/

Label endpoints let you manage labels (categories) in your tasks. You manage your labels independently (list, create, delete, and modify them) and then connect them to your tasks. Each task requires at least two labels for training. Each label must contain at least 20 images.
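You may want to check these two limits before calling the training endpoint. The hypothetical helper below works on counts you have already collected; how you gather them (for example, through the label and image endpoints) is up to you. The default thresholds are the ones stated above.

```python
def check_training_readiness(images_per_label, min_labels=2, min_images=20):
    """Return a list of problems that would block training; an empty list means ready.

    images_per_label maps a label name to the number of images it appears on.
    """
    problems = []
    if len(images_per_label) < min_labels:
        problems.append(f"task has {len(images_per_label)} label(s), needs {min_labels}")
    for name, count in sorted(images_per_label.items()):
        if count < min_images:
            problems.append(f"label {name!r} has {count} image(s), needs {min_images}")
    return problems
```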

List all your labels (returns a paginated result):

```bash
curl -v -XGET -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/detection/v2/label/
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")
labels, status = client.get_all_labels()
```

List all labels of the task:

```bash
curl -v -XGET -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/detection/v2/label/?task=__TASK_ID__
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")

# get Detection Task
task, status = client.get_task("__DETECTION_TASK_ID__")

# get all labels which are connected to the task
labels, status = task.get_labels()

for label in labels:
    print(label.id, label.name)
```

Create new label:

```bash
curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'name=New label' https://api.ximilar.com/detection/v2/label/
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")
detection_label, status = client.create_label(name='__NEW_LABEL_NAME__')
```

Connect a label to your task:

```bash
curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'label_id=__LABEL_ID__' https://api.ximilar.com/detection/v2/task/__TASK_ID__/add-label
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")

# get Detection Task and the label to connect
task, status = client.get_task("__DETECTION_TASK_ID__")
label, status = client.get_label("__DETECTION_LABEL_ID__")

task.add_label(label.id)
```

Detach a label from your task:

```bash
curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -F 'label_id=__LABEL_ID__' https://api.ximilar.com/detection/v2/task/__TASK_ID__/remove-label
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")

# get Detection Task
task, status = client.get_task("__DETECTION_TASK_ID__")

# detaching (not deleting) existing label from existing task
label, status = client.get_label("__DETECTION_LABEL_ID__")
task.detach_label(label.id)
```

Delete label:

```bash
curl -v -XDELETE -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/detection/v2/label/__LABEL_ID__/
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")
client.remove_label("__DETECTION_LABEL_ID__")

# another option
label, status = client.get_label("__DETECTION_LABEL_ID__")
label.remove()
```

Training image endpoint

To learn how to work with the training image endpoint, consult the corresponding part of the Recognition section.

Object endpoint - /v2/object/

Use the object endpoint to work with objects (bounding boxes) on images.

Create an object (a bounding box annotation of a given Label) on an image:

```bash
# "data" holds the box corners [xmin, ymin, xmax, ymax]; the numbers are example values only
curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' -H 'Content-Type: application/json' --data '{"detection_label": "__LABEL_ID__", "image": "__IMAGE_ID__", "data": [100, 150, 400, 600] }' https://api.ximilar.com/detection/v2/object
```

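```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")

# bounding box corners [xmin, ymin, xmax, ymax]; example values only
xmin, ymin, xmax, ymax = 100, 150, 400, 600

d_object, status = client.create_object("__DETECTION_LABEL_ID__", "__IMAGE_ID__", [xmin, ymin, xmax, ymax])

# fetch the created object again by its ID
d_object, status = client.get_object(d_object.id)
```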
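When you import existing annotations, you usually create several objects per image. The sketch below loops over the `create_object(label_id, image_id, box)` call shown above and rejects malformed boxes before any request is made. The helper name and its `(label_id, box)` input format are hypothetical, and the returned status is not inspected because its structure is not documented here.

```python
def upload_objects(client, image_id, annotations):
    """Create one object per (label_id, [xmin, ymin, xmax, ymax]) pair on one image.

    client is expected to behave like DetectionClient.create_object, returning
    an (object, status) pair as in the example above.
    """
    created = []
    for label_id, box in annotations:
        xmin, ymin, xmax, ymax = box
        # Reject boxes with out-of-order corners before calling the API.
        if xmin >= xmax or ymin >= ymax:
            raise ValueError(f"invalid box {box} for label {label_id}")
        d_object, _status = client.create_object(label_id, image_id, [xmin, ymin, xmax, ymax])
        created.append(d_object)
    return created
```

Usage: `upload_objects(client, "__IMAGE_ID__", [("__DETECTION_LABEL_ID__", [100, 150, 400, 600])])`.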

Get all objects of an image:

```bash
curl -v -XGET -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/detection/v2/object/?image=__IMAGE_ID__
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")
d_objects, status = client.get_objects_of_image("__IMAGE_ID__")

for d_object in d_objects:
    print(d_object.id, d_object.detection_label)
    print(d_object.data)
```

Delete object:

```bash
curl -v -XDELETE -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/detection/v2/object/__OBJECT_ID__/
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")
client.remove_object("__DETECTION_OBJECT_ID__")

# another way
object1, status = client.get_object("__DETECTION_OBJECT_ID__")
object1.remove()
```

Training endpoint - /v2/task/TASK_ID/train/

Use the training endpoint to start model training. Training takes from a few minutes to a few hours, depending on the number of images in your training collection. You are notified by email when the training starts and when it finishes.

Start training:

```bash
curl -v -XPOST -H 'Authorization: Token __API_TOKEN__' https://api.ximilar.com/detection/v2/task/__TASK_ID__/train/
```

```python
from ximilar.client import DetectionClient

client = DetectionClient("__API_TOKEN__")

# get Detection Task
task, status = client.get_task("__DETECTION_TASK_ID__")

# start training
task.train()
```